Pipeline Pilot

Simplify Enterprise Science & Engineering

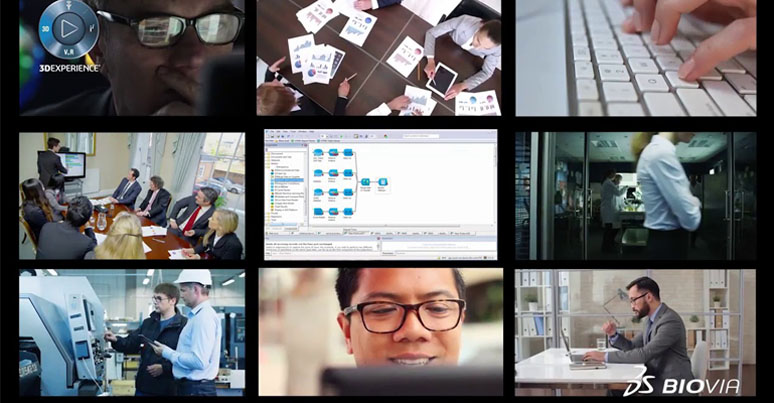

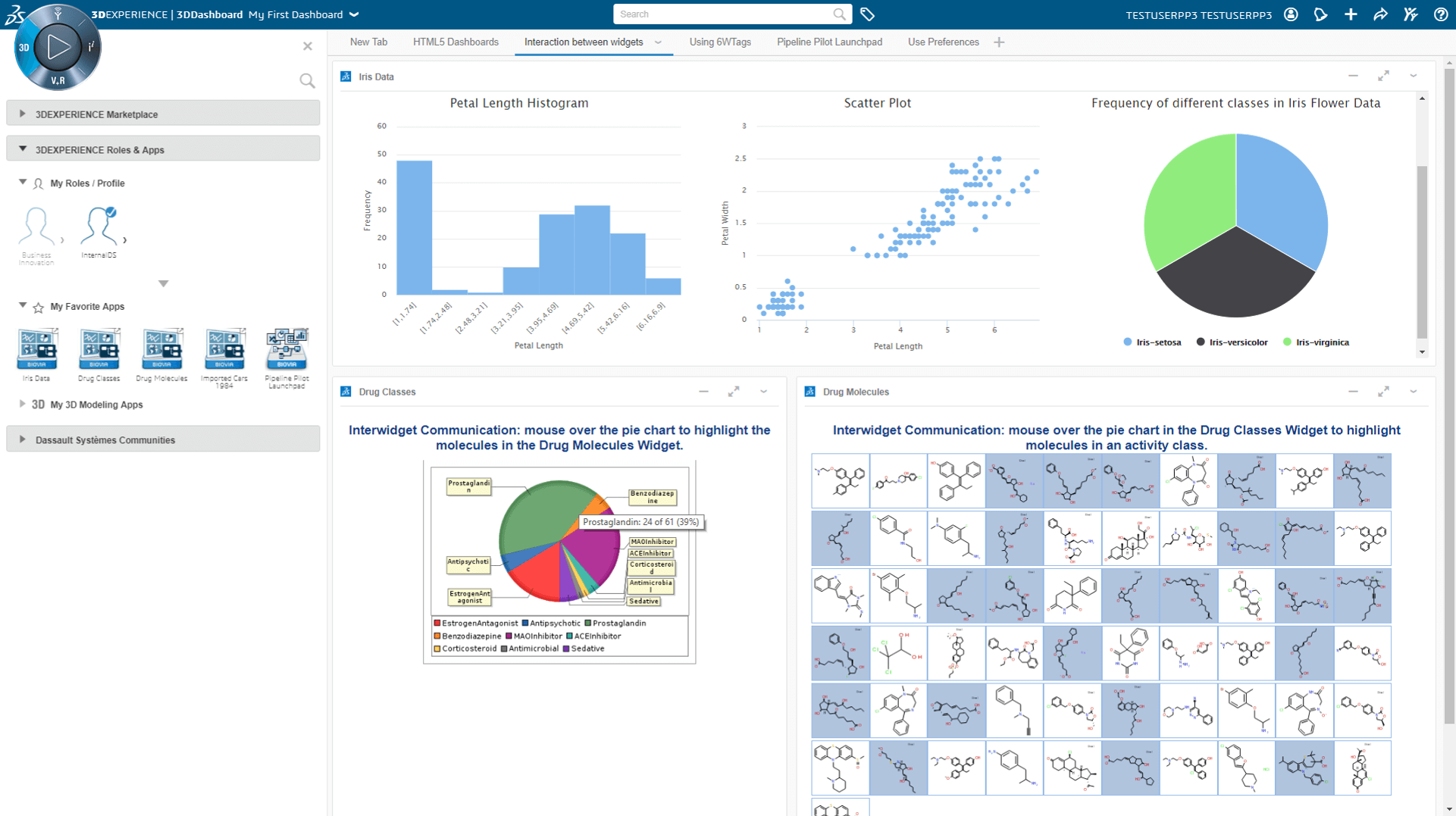

Pipeline Pilot streamlines the building, sharing, and deployment of complex data workflows with its graphically based, code-optional environment purpose-built for science and engineering. Developers can rapidly create, share, and reuse complex workflows. Data scientists can automate data access, data cleaning, and model creation, and can bring techniques such as molecular docking, 3D QSAR, and ZDOCK protein-protein docking into the same workflows. Researchers can augment their work with the latest AI and machine learning tools alongside QSAR software and computational chemistry applications. Business leaders can quickly interpret results in interactive custom dashboards, supported by ADME analysis and 3D molecular structure visualization in Discovery Studio Visualizer.

Advanced Data Science, AI, and Machine Learning: Democratized

Pipeline Pilot allows data scientists to train models with only a few clicks, compare the performance of different model types, and save trained models for future use. Expert users can also embed custom Python, Perl, or R scripts so that their work can be reused across the organization, and can integrate cheminformatics and structure-based drug design techniques into the same workflows.

Enterprise Data Science Solutions: Faster

Pipeline Pilot wraps complex functions in simple drag-and-drop components that can be strung together into a workflow, called a protocol. Protocols can be shared between users and groups for reuse, so solutions are developed faster and standardized across the organization. Protocols can also draw on LIMS software, Discovery Studio Visualizer, and pharmacophore modeling and docking techniques.
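Pipeline Pilot protocols are assembled graphically rather than written as code, so the following is only a conceptual sketch in Python of the idea of components strung into a reusable workflow. It assumes a simple model in which each component receives a stream of data records and yields a transformed stream. The component names (`drop_missing`, `add_field`, `keep_if`, `run_protocol`) and the sample records are hypothetical illustrations, not Pipeline Pilot's API.

```python
from typing import Callable, Dict, Iterable, Iterator, List

# Assumption for illustration: a data record is a plain dict of field -> value.
Record = Dict[str, object]
# A component takes a stream of records and yields a transformed stream.
Component = Callable[[Iterable[Record]], Iterator[Record]]


def drop_missing(field: str) -> Component:
    """Hypothetical cleaning component: drop records with no value for `field`."""
    def component(records: Iterable[Record]) -> Iterator[Record]:
        for rec in records:
            if rec.get(field) is not None:
                yield rec
    return component


def add_field(name: str, fn: Callable[[Record], object]) -> Component:
    """Hypothetical calculator component: add a computed field to each record."""
    def component(records: Iterable[Record]) -> Iterator[Record]:
        for rec in records:
            out = dict(rec)  # copy so the input record is left unchanged
            out[name] = fn(rec)
            yield out
    return component


def keep_if(pred: Callable[[Record], bool]) -> Component:
    """Hypothetical filter component: pass only records that satisfy `pred`."""
    def component(records: Iterable[Record]) -> Iterator[Record]:
        return (rec for rec in records if pred(rec))
    return component


def run_protocol(components: List[Component], records: Iterable[Record]) -> List[Record]:
    """Chain components in order, feeding each one's output into the next."""
    stream: Iterable[Record] = records
    for component in components:
        stream = component(stream)
    return list(stream)


# A reusable "protocol": the same list of components can be shared and rerun on new data.
protocol: List[Component] = [
    drop_missing("mw"),
    add_field("mw_kda", lambda r: r["mw"] / 1000),
    keep_if(lambda r: r["mw"] <= 500),
]

# Hypothetical sample records (molecular weights in daltons).
data = [
    {"id": "A", "mw": 320.4},
    {"id": "B", "mw": None},
    {"id": "C", "mw": 612.7},
]

result = run_protocol(protocol, data)
print(result)
```

Because each component only sees a stream of records, components can be reordered, swapped, or reused in other protocols without changing their internals, which is the kind of reuse that shared protocols are meant to enable.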

Manual Data Science Workflows: Automated

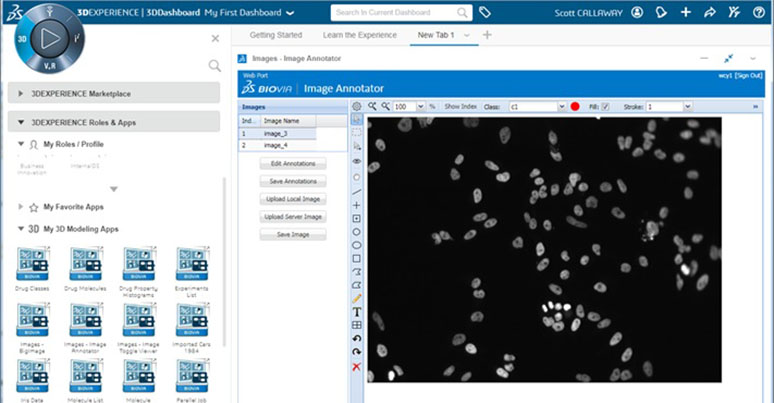

The magic of Pipeline Pilot lies in automating these manual processes. Schedule tasks, clean and blend datasets, and set up interactive dashboards, including for workflows that run molecular docking steps, ADMET analysis, and protein-protein interaction analysis. Protocols can also be deployed as web services, simplifying the end-user experience.

Enterprise R&D and Operations: Data-Driven

With Pipeline Pilot, protocols can be operationalized to optimize the use and reuse of data. Deploy a machine learning model as a web service. Create custom applications to manage internal IP, drawing on computational chemistry applications such as CHARMM, and integrate them with your existing IT environment.

Support End-to-End Data Science Workflows

Pipeline Pilot supports end-to-end automated workflow creation and execution. Connect to internal and external data sources. Access and parse a wide range of scientific data formats. Prepare data for analysis. Build, train, and maintain models, including QSAR models for ligand-based drug design.